Neousys Ruggedized AI Inference Platform Supporting NVIDIA Tesla and Intel 8th-Gen Core i Processor - CoastIPC

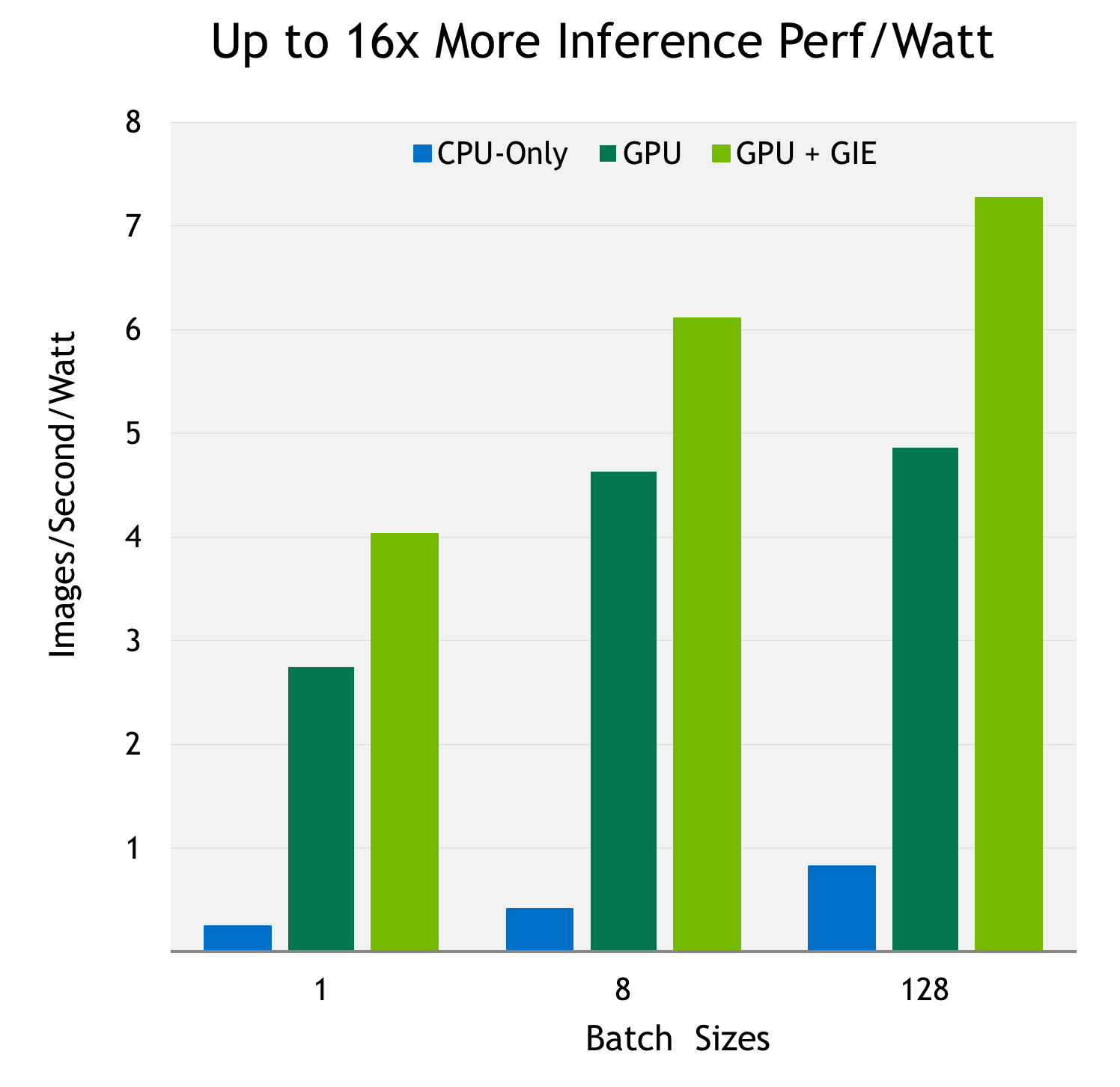

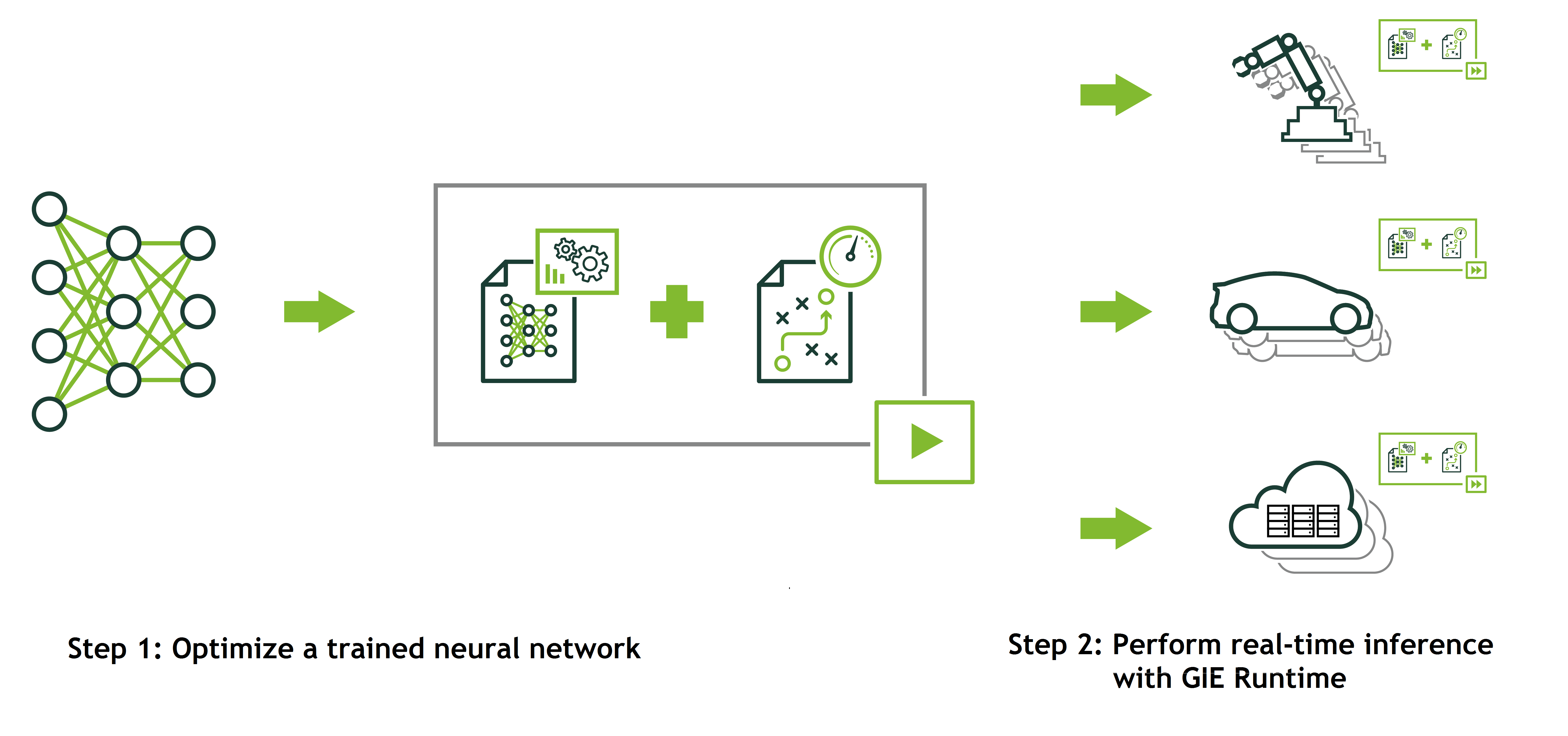

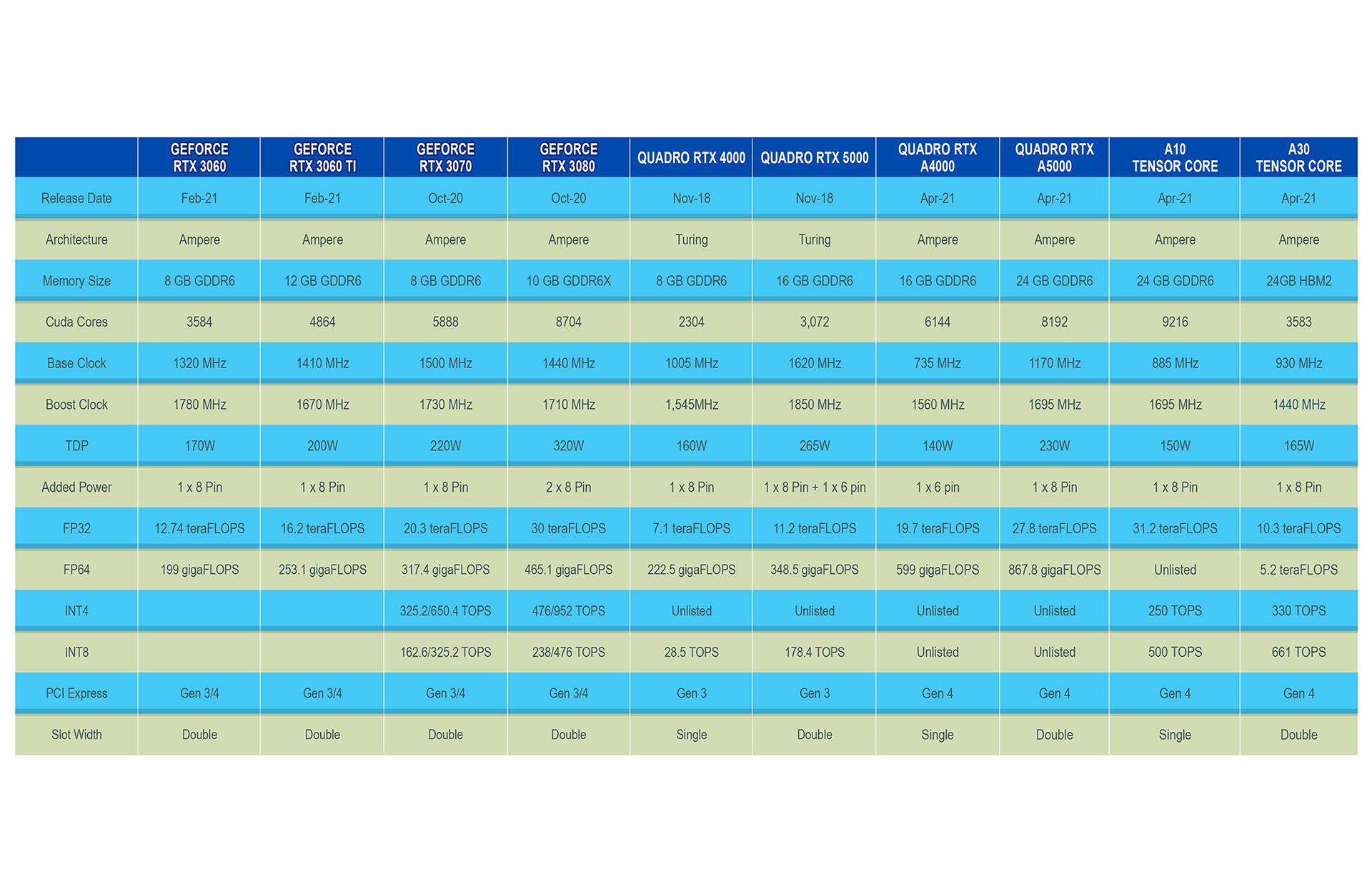

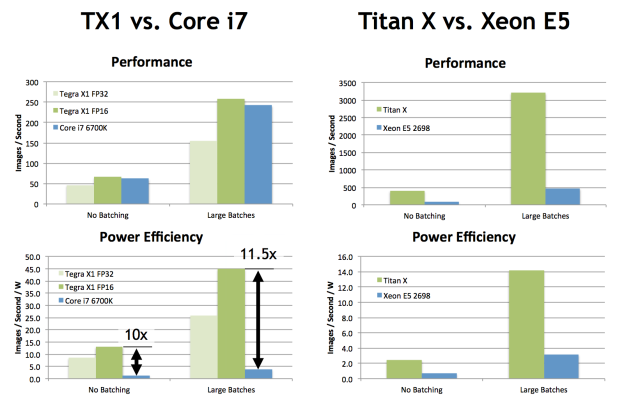

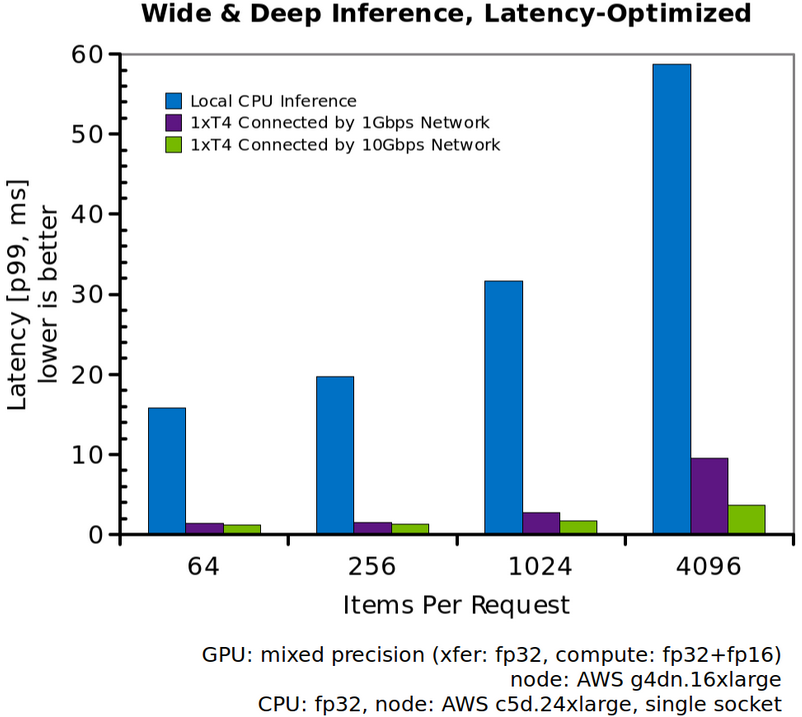

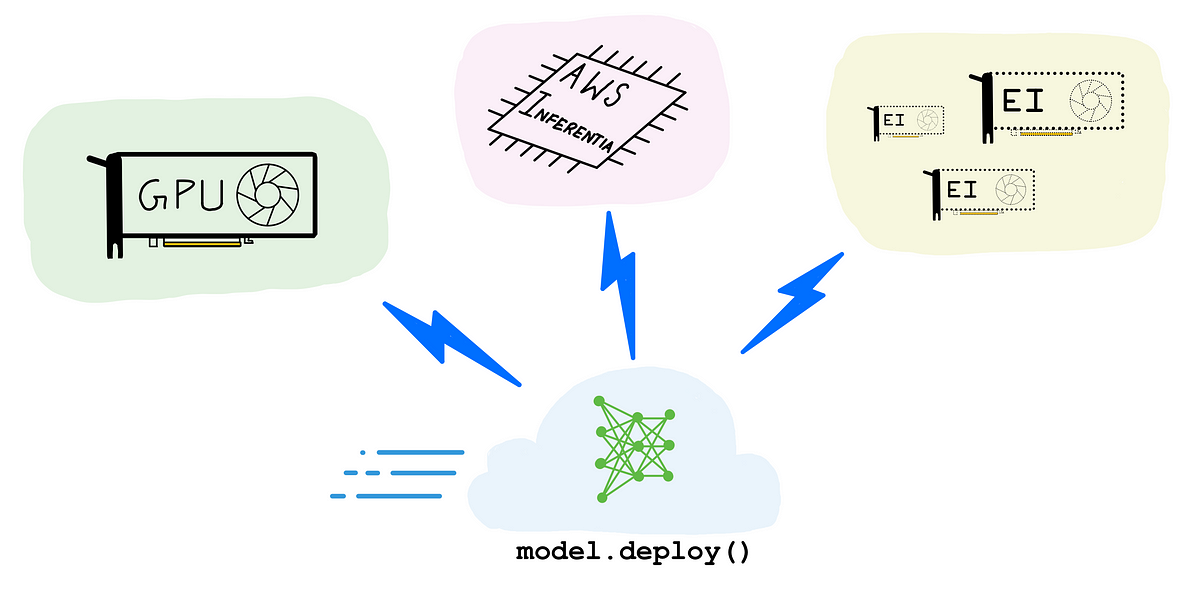

A complete guide to AI accelerators for deep learning inference — GPUs, AWS Inferentia and Amazon Elastic Inference | by Shashank Prasanna | Towards Data Science

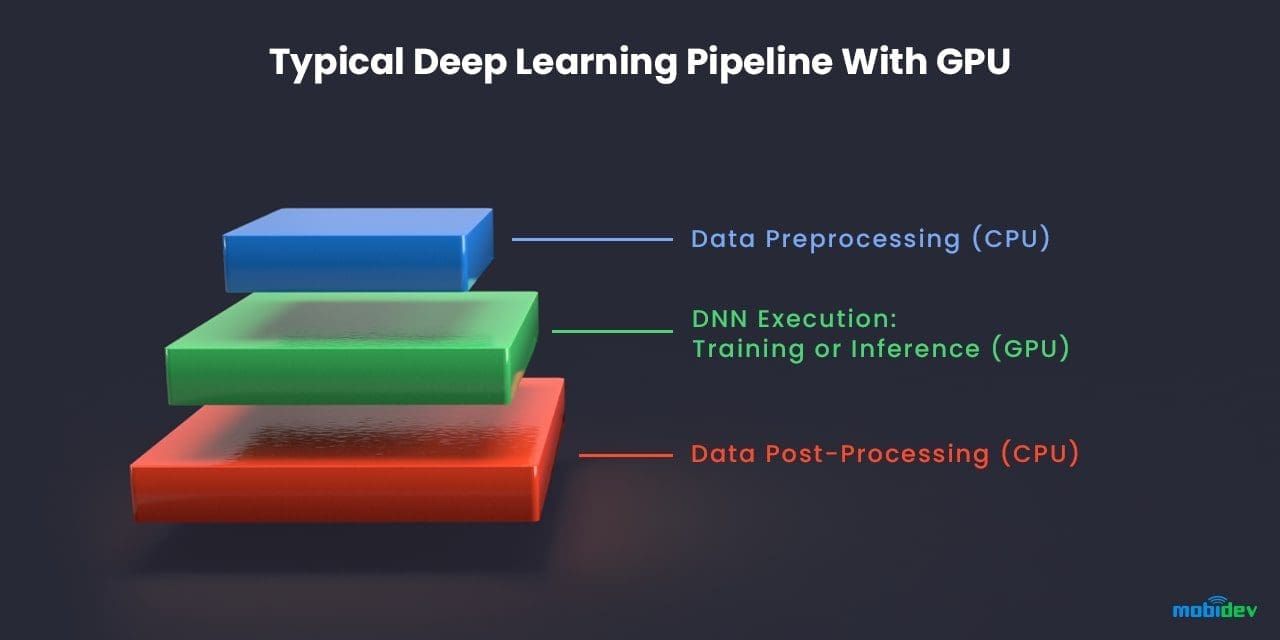

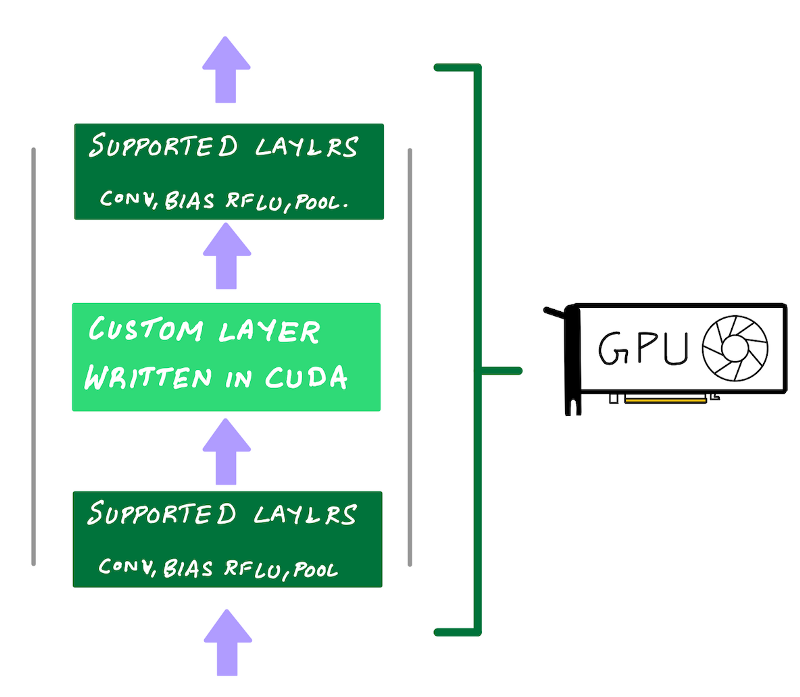

Optimize NVIDIA GPU performance for efficient model inference | by Qianlin Liang | Towards Data Science

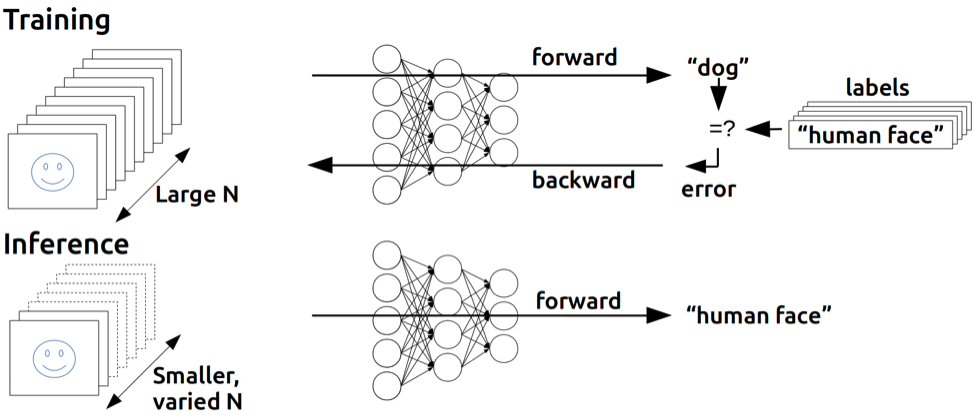

The performance of training and inference relative to the training time... | Download Scientific Diagram

GPU-Accelerated Inference for Kubernetes with the NVIDIA TensorRT Inference Server and Kubeflow | by Ankit Bahuguna | kubeflow | Medium